Host Section

Initial configuration options specific to the installer, including how ESS should be deployed.

The first section of the ESS installer GUI is the Host section. Here you will configure essential details of how ESS will be installed, including the deployment type, subscription credentials, the PostgreSQL to use, and whether or not your setup is airgapped.

Settings configured via the UI in this section are mainly saved to your cluster.yml. If performing a Kubernetes deployment, you will also be able to configure Host Admin settings, which are saved to both internal.yml and deployment.yml.

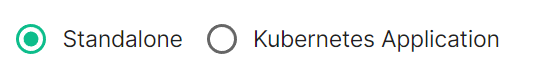

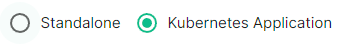

Depending on your environment, you will need to select either Standalone or Kubernetes Application. Standalone installs microk8s locally and deploys to it, so all pods run on the host machine. Kubernetes Application deploys to your existing Kubernetes infrastructure, using a context you must already have set up in your kube config.

Deployment (Standalone)

Install

Config Example

spec:
  connectivity:
    dockerhub:
      password: example
      username: example
  install:
    emsImageStore:
      password: example
      username: example
    webhooks:
      caPassphrase: example
    # Options unique to selecting Standalone
    certManager:
      adminEmail: example@example.com
    microk8s:
      dnsResolvers:
        - 8.8.8.8
        - 8.8.4.4
      postgresInCluster:
        hostPath: /data/postgres
        passwordsSeed: example

The above is an example of the cluster.yml config generated when selecting Standalone. Note that no specific flag is used within the config to select between Standalone and Kubernetes. If you choose to configure ESS manually, bypassing the GUI, ensure that only the config options specific to how you wish to deploy are provided.
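Because the deployment type is implied by which keys are present rather than by a flag, a hand-written cluster.yml that mixes both sets of options can be hard to spot. The following is a minimal sketch of such a check, not part of the installer. It assumes the file has already been parsed into a Python dictionary (for example with a YAML library), the helper name `guess_deployment_type` is hypothetical, and the key groupings are taken from the Standalone and Kubernetes Application examples on this page rather than from an official schema.

```python
# Keys under spec.install that the examples on this page mark as unique to
# one deployment type. This grouping is an assumption, not an official schema.
STANDALONE_KEYS = {"certManager", "microk8s"}
KUBERNETES_KEYS = {
    "clusterDeployment",
    "kubeContextName",
    "namespaces",
    "skipElementCrdsSetup",
    "skipOperatorSetup",
    "skipUpdaterSetup",
}


def guess_deployment_type(cluster_config: dict) -> str:
    """Return 'standalone', 'kubernetes', 'mixed' or 'unknown' for a parsed cluster.yml."""
    install = cluster_config.get("spec", {}).get("install", {})
    has_standalone = bool(STANDALONE_KEYS.intersection(install))
    has_kubernetes = bool(KUBERNETES_KEYS.intersection(install))
    if has_standalone and has_kubernetes:
        return "mixed"
    if has_standalone:
        return "standalone"
    if has_kubernetes:
        return "kubernetes"
    return "unknown"


# A dictionary shaped like the Standalone example above.
example = {
    "spec": {
        "install": {
            "emsImageStore": {"username": "example", "password": "example"},
            "certManager": {"adminEmail": "example@example.com"},
            "microk8s": {"dnsResolvers": ["8.8.8.8", "8.8.4.4"]},
        }
    }
}
print(guess_deployment_type(example))
```

A result of `mixed` would suggest that the file contains options for both deployment types and should be reviewed before running the installer.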

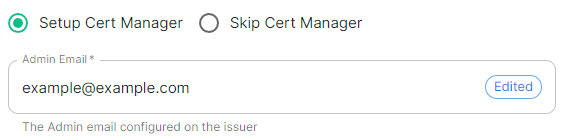

Cert Manager

Config Example

spec:
  install:
    certManager:
      adminEmail: example@example.com

EMS Image Store

Config Example

spec:
  install:
    emsImageStore:
      password: token
      username: test

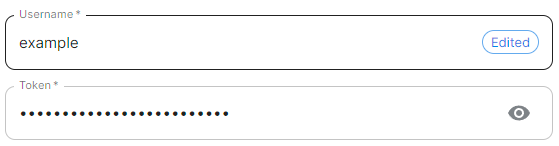

MicroK8s

Config Example

spec:
  install:
    microk8s:
      persistentVolumesPath: /data/changed-location
      registrySize: 25Gi


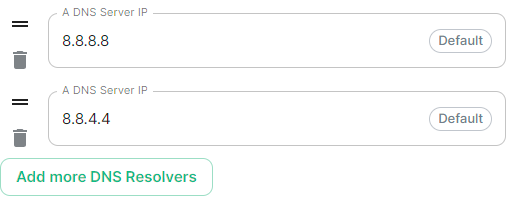

DNS Resolvers

Config Example

spec:
  install:
    microk8s:
      dnsResolvers:
        - 8.8.8.8
        - 8.8.4.4

Defaulting to 8.8.8.8 and 8.8.4.4, the DNS server IPs set here will be used by all deployed pods. Click Add more DNS Resolvers to add additional entries as required.
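Since the resolvers set here are used by every deployed pod, a mistyped address is worth catching before deployment. The following is a minimal sketch using Python's standard `ipaddress` module. The helper name `invalid_resolvers` is hypothetical and the check is not part of the installer; it also accepts IPv6 addresses, which this page does not confirm the installer supports.

```python
import ipaddress


def invalid_resolvers(resolvers):
    """Return the entries that are not syntactically valid IP addresses."""
    bad = []
    for entry in resolvers:
        try:
            ipaddress.ip_address(entry)
        except ValueError:
            bad.append(entry)
    return bad


# The two defaults are valid; the third entry is missing an octet.
print(invalid_resolvers(["8.8.8.8", "8.8.4.4", "8.8.8"]))
```

This only checks the syntax of each entry; it does not test whether the resolver is reachable from your hosts.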

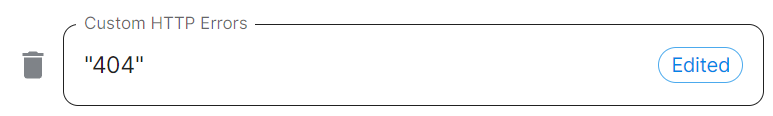

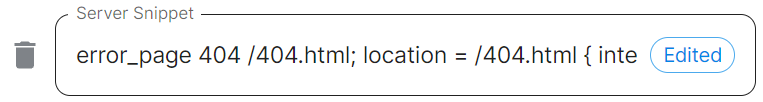

Nginx Extra Configuration

Config Example

spec:
  install:
    microk8s:
      # Not present when disabled
      nginxExtraConfiguration:
        custom-http-errors: '"404"'
        server-snippet: >-
          error_page 404 /404.html; location = /404.html { internal; return 200
          "<p>Hello World!</p>"; }

As linked via the ESS installer GUI, see the Ingress-Nginx Controller ConfigMaps documentation for the options that can be configured.

For example, if you wanted to replace the standard 404 error page, you could do this using both custom-http-errors and server-snippet. To configure this via the installer, specify custom-http-errors as the Name and click Add to Nginx Extra Configuration, then provide the required value in the newly created field:

Repeat for server-snippet:

The above example only demonstrates how to use the Nginx Extra Configuration option; it is not recommended to use this example config. Ideally, your web server should manage traffic that would otherwise hit a 404 served by ESS.

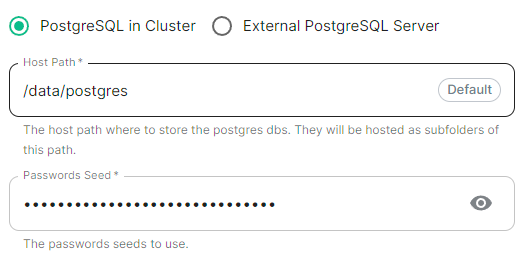

PostgreSQL in Cluster

Config Example

spec:
  install:
    microk8s:
      # postgresInCluster: {} # If disabled
      postgresInCluster:
        hostPath: /data/postgres
        passwordsSeed: example

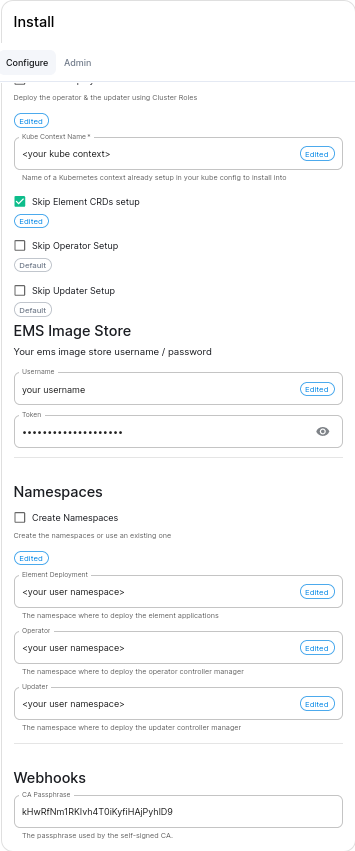

Deployment (Kubernetes Application)

Install

Config Example

spec:
  connectivity:
    dockerhub:
      password: example
      username: example
  install:
    emsImageStore:
      password: example
      username: example
    webhooks:
      caPassphrase: example
    # Options unique to selecting Kubernetes Application
    clusterDeployment: true
    kubeContextName: example
    namespaces: {}
    skipElementCrdsSetup: false
    skipOperatorSetup: false
    skipUpdaterSetup: false

The above is an example of the cluster.yml config generated when selecting Kubernetes. Note that no specific flag is used within the config to select between Standalone and Kubernetes. If you choose to configure ESS manually, bypassing the GUI, ensure that only the config options specific to how you wish to deploy are provided.

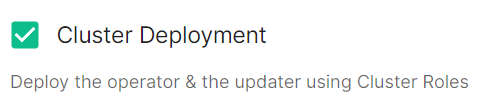

Cluster Deployment

Config Example

spec:
  install:
    clusterDeployment: true

Deploy the operator & the updater using Cluster Roles.

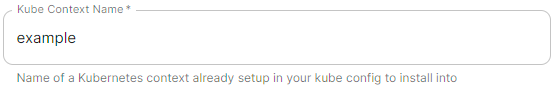

Kube Context Name

Config Example

spec:
  install:
    kubeContextName: example

The name of the Kubernetes context, which you must have already set up, that ESS should be deployed into.

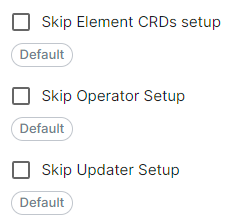

Skip Setup Options

Config Example

spec:
  install:
    skipElementCrdsSetup: false
    skipOperatorSetup: false
    skipUpdaterSetup: false

Selecting these will allow you to skip the setup of the Element CRDs, Operator and Updater as required.

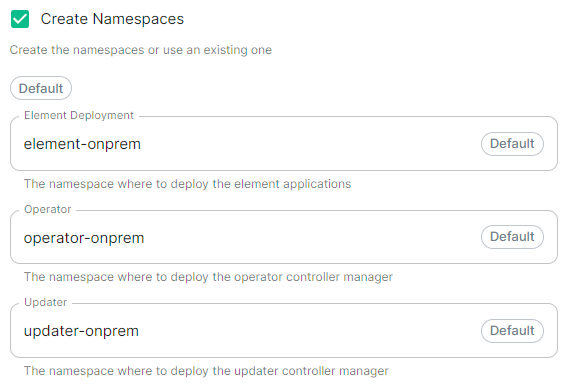

Namespaces

Config Example

spec:
  install:
    # namespaces: {} # When left as default namespaces
    # namespaces: # When `Create Namespaces` is disabled
    #   createNamespaces: false
    namespaces: # When custom namespaces are provided
      elementDeployment: element-example # Omit any that should remain as default
      operator: operator-example
      updater: updater-example

Allows you to specify the namespaces you wish to deploy into, with the additional option to create them if they don't exist.

Namespace-scoped Deployments

Namespace-scoped deployments in Kubernetes offer a way to organize and manage resources within specific namespaces rather than globally across the entire cluster.

Preparing the Cluster

Installing the Helm Chart Repositories

Start on a machine that has Helm v3 installed and configured for your Kubernetes cluster, then pull down the two charts that you will need.

First, let's add the element-updater repository to helm:

helm repo add element-updater https://registry.element.io/helm/element-updater --username ems_image_store_username --password 'ems_image_store_token'

Replace ems_image_store_username and ems_image_store_token with the values provided to you by Element.

Secondly, let's add the element-operator repository to helm:

helm repo add element-operator https://registry.element.io/helm/element-operator --username ems_image_store_username --password 'ems_image_store_token'

Replace ems_image_store_username and ems_image_store_token with the values provided to you by Element.

Now that we have the repositories configured, we can verify this by:

helm repo list

and should see the following in that output:

NAME URL

element-operator https://registry.element.io/helm/element-operator

element-updater https://registry.element.io/helm/element-updater

Deploy the CRDs

Write the following values.yaml file:

clusterDeployment: true

deployCrds: true

deployCrdRoles: true

deployManager: false

To install the CRDs with the helm charts, simply run:

helm install element-updater element-updater/element-updater -f values.yaml

helm install element-operator element-operator/element-operator -f values.yaml

At this point, you should have the following CRDs available:

[user@helm ~]$ kubectl get crds | grep element.io

elementwebs.matrix.element.io 2023-10-11T13:23:14Z

wellknowndelegations.matrix.element.io 2023-10-11T13:23:14Z

elementcalls.matrix.element.io 2023-10-11T13:23:14Z

hydrogens.matrix.element.io 2023-10-11T13:23:14Z

mautrixtelegrams.matrix.element.io 2023-10-11T13:23:14Z

sydents.matrix.element.io 2023-10-11T13:23:14Z

synapseusers.matrix.element.io 2023-10-11T13:23:14Z

bifrosts.matrix.element.io 2023-10-11T13:23:14Z

lowbandwidths.matrix.element.io 2023-10-11T13:23:14Z

synapsemoduleconfigs.matrix.element.io 2023-10-11T13:23:14Z

matrixauthenticationservices.matrix.element.io 2023-10-11T13:23:14Z

ircbridges.matrix.element.io 2023-10-11T13:23:14Z

slidingsyncs.matrix.element.io 2023-10-11T13:23:14Z

securebordergateways.matrix.element.io 2023-10-11T13:23:14Z

hookshots.matrix.element.io 2023-10-11T13:23:14Z

matrixcontentscanners.matrix.element.io 2023-10-11T13:23:14Z

sygnals.matrix.element.io 2023-10-11T13:23:14Z

sipbridges.matrix.element.io 2023-10-11T13:23:14Z

livekits.matrix.element.io 2023-10-11T13:23:14Z

integrators.matrix.element.io 2023-10-11T13:23:14Z

jitsis.matrix.element.io 2023-10-11T13:23:14Z

mautrixwhatsapps.matrix.element.io 2023-11-15T09:03:48Z

synapseadminuis.matrix.element.io 2023-10-11T13:23:14Z

synapses.matrix.element.io 2023-10-11T13:23:14Z

groupsyncs.matrix.element.io 2023-10-11T13:23:14Z

pipes.matrix.element.io 2023-10-11T13:23:14Z

elementdeployments.matrix.element.io 2023-10-11T13:34:25Z

chatterboxes.matrix.element.io 2023-11-21T15:55:59Z

Namespace-scoped role

In the namespace where ESS will be deployed, create the following role and role bindings to give a user permission to deploy ESS:

User role:

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ess-additional
rules:
  - apiGroups:
      - apiextensions.k8s.io
    resources:
      - customresourcedefinitions
    verbs:
      - list
      - watch
      - get
  - apiGroups:
      - project.openshift.io
    resources:
      - projects
    verbs:
      - get
      - list
      - watch

User role bindings:

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ess-additional
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ess-additional
subjects:
  # role subjects which map to the user or its groups
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ess
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  # role subjects which map to the user or its groups
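The `subjects` entries are left for you to fill in. As an illustration only, the sketch below builds both role bindings with a single user subject and prints them as JSON, which `kubectl apply -f` accepts as well as YAML. The user name `ess-deployer` and the helper name `role_binding` are hypothetical; substitute the user or group that applies in your cluster.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def role_binding(name, role_kind, role_name, user):
    """Build a RoleBinding manifest that grants the given role to one user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name},
        "roleRef": {"apiGroup": RBAC_GROUP, "kind": role_kind, "name": role_name},
        # A single User subject; a group would use kind "Group" instead.
        "subjects": [{"apiGroup": RBAC_GROUP, "kind": "User", "name": user}],
    }


# "ess-deployer" is a placeholder user name, not a value defined by ESS.
bindings = [
    role_binding("ess-additional", "Role", "ess-additional", "ess-deployer"),
    role_binding("ess", "ClusterRole", "edit", "ess-deployer"),
]
for binding in bindings:
    print(json.dumps(binding, indent=2))
```

Each printed manifest would be saved to its own file and applied in the namespace where ESS will be deployed.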

Once your cluster is prepared, you can set up your namespace-scoped deployment by configuring these settings:

- Skip Operator Setup. Unchecked
- Skip Updater Setup. Unchecked
- Skip Element CRDs Setup. Checked
- Cluster Deployment. Unchecked
- Kube Context Name. Set to user_kube_context_name
- Namespaces.
  - Create Namespaces. Unchecked
  - Operator. Set to namespace_to_deploy_ess
  - Updater. Set to same as Operator, namespace_to_deploy_ess
  - Element Deployment. Set to same as Operator, namespace_to_deploy_ess
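For those configuring cluster.yml by hand, the settings above can be sketched as the `install` section they would presumably produce. This is an illustration under assumptions: the mapping from GUI labels to keys is inferred from the Config Examples earlier on this page, the helper name `namespace_scoped_install` is hypothetical, and the output is printed as JSON, which most YAML parsers also read.

```python
import json


def namespace_scoped_install(kube_context: str, namespace: str) -> dict:
    """Build the spec.install keys matching the namespace-scoped settings listed above."""
    return {
        "clusterDeployment": False,      # Cluster Deployment: unchecked
        "kubeContextName": kube_context,
        "skipElementCrdsSetup": True,    # CRDs were already deployed with helm
        "skipOperatorSetup": False,
        "skipUpdaterSetup": False,
        "namespaces": {
            "createNamespaces": False,   # Create Namespaces: unchecked
            "operator": namespace,
            "updater": namespace,        # same namespace as the operator
            "elementDeployment": namespace,
        },
    }


config = {
    "spec": {
        "install": namespace_scoped_install(
            "user_kube_context_name", "namespace_to_deploy_ess"
        )
    }
}
print(json.dumps(config, indent=2))
```

Compare the result against the cluster.yml that the installer GUI generates before relying on it, since the GUI may write additional keys.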

Connectivity

Config Example

spec:
  connectivity:

Connected

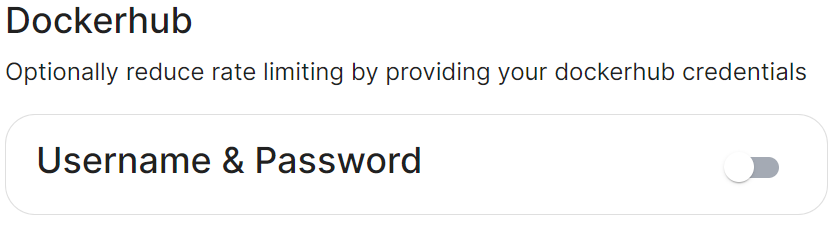

Config Example

spec:
  connectivity:
    # dockerhub: {} # When Username & Password is disabled per default
    dockerhub:
      password: password
      username: test

Connected means the installer will use the previously provided EMS Image Store credentials to pull the required pod images as part of deployment. Optionally, you can specify Dockerhub credentials to reduce potential rate limiting.

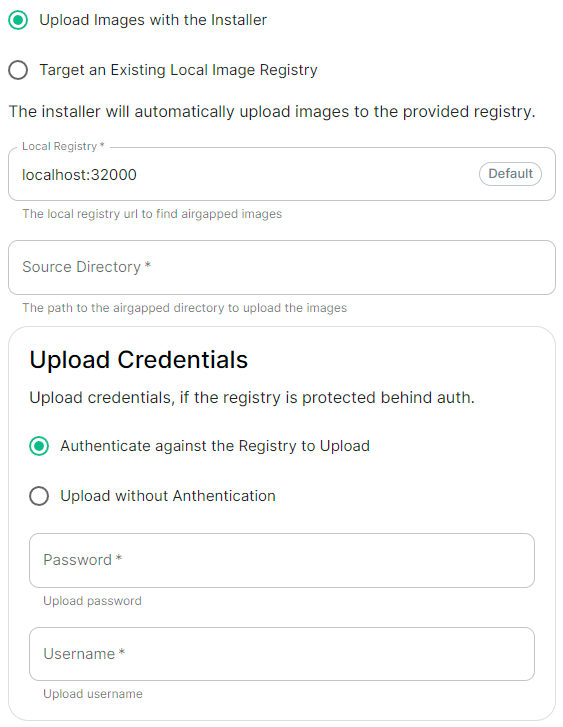

Airgapped


Config Example

spec:
  connectivity:
    airgapped:
      localRegistry: localhost:32000
      sourceDirectory: /home/ubuntu/airgapped/
      # uploadCredentials not present if `Target an Existing Local Image Registry` selected
      # uploadCredentials: {} # If 'Upload without Authentication'
      uploadCredentials:
        password: example
        username: example

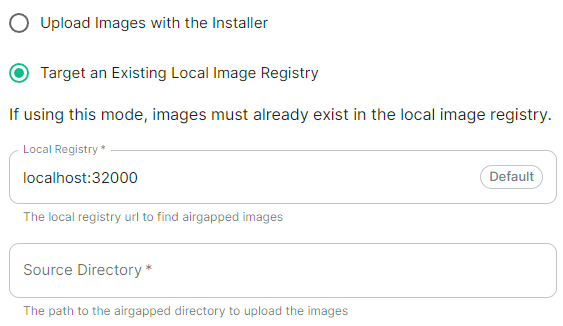

An airgapped environment is any environment in which the running hosts do not have access to the wider internet. In this situation, the hosts cannot download the software they need from Element and cannot share telemetry data back with Element.

Selecting Airgapped means the installer will rely on images stored in a registry local to your environment. By default, the installer hosts this registry and uploads the images found within the specified Source Directory; alternatively, you can specify a registry already present in your environment.

Getting set up within an Airgapped environment

Alongside each installer binary available for download, customers with airgapped permissions will find an equivalent airgapped package, element-enterprise-installer-airgapped-<version>-gui.tar.gz. Download and copy this archive to the machine running the installer, then run tar -xzvf element-enterprise-installer-airgapped-<version>-gui.tar.gz to extract its contents. You should see a folder named airgapped containing the following directories:

- pip
- galaxy
- snaps
- containerd
- images

Copy the full path of the root airgapped folder, for instance /home/ubuntu/airgapped, and paste it into the Source Directory field. Should you ever update the ESS installer binary, you will need to delete this airgapped folder and replace it with its updated equivalent.

Your airgapped machine will still require access to airgapped Linux repositories, depending on your OS. If using Red Hat Enterprise Linux, you will also need access to the EPEL repository in your airgapped environment.
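Before pasting the path into the Source Directory field, it can help to confirm that the extracted folder is complete. The following is a minimal sketch, not part of the installer. The helper name `missing_airgapped_dirs` is hypothetical, and the expected directory names are those listed above, reading the second entry as `galaxy`.

```python
from pathlib import Path

# Directory names as listed on this page for the extracted airgapped folder.
EXPECTED_DIRS = ("pip", "galaxy", "snaps", "containerd", "images")


def missing_airgapped_dirs(source_directory):
    """Return the expected sub-directories that are absent from source_directory."""
    root = Path(source_directory)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


# Example path taken from this page; adjust it to where you extracted the archive.
missing = missing_airgapped_dirs("/home/ubuntu/airgapped")
if missing:
    print("Missing directories:", ", ".join(missing))
else:
    print("All expected directories are present.")
```

An incomplete listing usually means the archive was only partially extracted or that the wrong folder level was selected.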

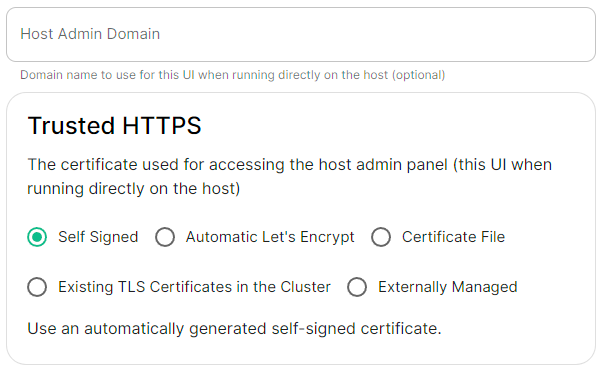

Host Admin

Config Example

- internal.yml

spec:
  fqdn: admin.example.com
  tls:
    # When selecting `Self Signed`
    # mode: self-signed
    # When selecting `Automatic Let's Encrypt`
    mode: automatic
    automatic:
      adminEmail: example@example.com
    # When selecting `Certificate File`
    # mode: certfile
    # certificate:
    #   certFile: "example" # Base64 encoded string from certificate
    #   privateKey: "example" # Base64 encoded string from certificate key
    # When selecting `Existing TLS Certificates in the Cluster`
    # mode: existing
    # secretName: example
    # When selecting `Externally Managed`
    # mode: external

- deployment.yml

spec:
  components:
    synapseAdmin:
      config:
        hostOrigin: >-
          https://admin.example.com,https://admin.example.com:8443

The Host Admin section allows you to configure the domain name and certificates used to serve the ESS installer GUI when it runs directly on the host. Changes made here take effect the next time you run the installer.
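When setting the Certificate File mode by hand, the config example describes certFile and privateKey as Base64 encoded strings. A minimal sketch of producing such a string with Python's standard `base64` module follows. It assumes the whole contents of the certificate or key file are encoded as-is, which this page does not state explicitly, and the helper name `encode_for_config` is hypothetical.

```python
import base64


def encode_for_config(file_bytes: bytes) -> str:
    """Return the Base64 text form of a certificate or key file's raw bytes."""
    return base64.b64encode(file_bytes).decode("ascii")


# Placeholder bytes standing in for the contents of a real certificate file.
sample = b"-----BEGIN CERTIFICATE-----\n...\n-----END CERTIFICATE-----\n"
print(encode_for_config(sample))
```

In practice you would read the bytes from your own certificate and key files, for example with `open(path, "rb").read()`, and paste each resulting string into the matching field.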